Road Network Operations

& Intelligent Transport Systems

A guide for practitioners!

There are practical aspects which should be considered before starting an evaluation. In addition to the overall budget for implementing the ITS scheme, a budget should be allocated to evaluation – which will involve work before and after implementation. In some cases this will be considered as part of the implementation. In other cases the evaluation budget will be treated separately. In either case, the evaluation budget represents an investment in planning for future projects. It is important to consider:

The evaluation should focus on measuring the key aspect that the scheme is intended to change, rather than collecting general statistics or ‘feel good’ measures that may bear no relation to the effect of the ITS. For example, an evaluation of a real-time journey time information service which just asks users whether the ITS has had an impact on the quality of their journeys will only provide an indication of user satisfaction. Unless traffic flows and journey times are measured on the main route and alternative routes when incidents occur, the evaluation will not be able to determine the extent to which the ITS reduces the impact of incidents by spreading traffic onto alternative routes. Nor will it be able to identify any detrimental effects on other roads caused by diverted traffic.
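As an illustration of the kind of measurement described above, the following minimal sketch compares mean journey times during incidents on a main route and an alternative route, before and after a scheme is introduced. The observations, route names and function names are hypothetical and chosen only for this example. A simple before/after difference of this kind does not control for other influences such as changes in demand, so a real evaluation would need a more careful design.

```python
from statistics import mean

# Hypothetical journey times (minutes) recorded during incidents.
# Each record is (period, route, journey time); none of these values
# come from a real scheme.
observations = [
    ("before", "main", 42.0),
    ("before", "main", 38.0),
    ("before", "alternative", 25.0),
    ("before", "alternative", 27.0),
    ("after", "main", 33.0),
    ("after", "main", 35.0),
    ("after", "alternative", 29.0),
    ("after", "alternative", 31.0),
]


def mean_journey_times(records):
    """Return {(period, route): mean journey time in minutes}."""
    grouped = {}
    for period, route, minutes in records:
        grouped.setdefault((period, route), []).append(minutes)
    return {key: mean(values) for key, values in grouped.items()}


def change_by_route(records):
    """Return {route: after mean minus before mean}.

    A negative value means journeys became faster on that route;
    a positive value may indicate a detrimental effect of diverted traffic.
    """
    means = mean_journey_times(records)
    routes = {route for _, route in means}
    return {
        route: means[("after", route)] - means[("before", route)]
        for route in routes
        if ("before", route) in means and ("after", route) in means
    }


changes = change_by_route(observations)
for route, delta in sorted(changes.items()):
    print(f"{route}: {delta:+.1f} minutes")
```

Reporting the change separately for each route keeps any adverse effect on the alternative route visible, rather than hiding it inside a network-wide average.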

Where possible, the ITS should be programmed to provide data which can be used to monitor its impacts. For example, real-time information at bus stops provides information on bus headways. Storing this data gives a record of intervals between bus services, which, for passengers, is a key aspect of reliability. In the case study on demand responsive transport in Prince William County USA, the service data was used to monitor performance automatically, reducing the cost of data collection and analysis. (See ITS for Demand Responsive Transport Operation, Prince William County USA)
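A minimal sketch of how stored arrival data could be turned into a headway record is shown below. The arrival times, the time format and the choice of indicators (mean headway, longest gap and coefficient of variation) are assumptions made for illustration; they are not taken from the Prince William County case study or from any particular real-time information system.

```python
from datetime import datetime
from statistics import mean, pstdev

# Hypothetical arrival times logged at one stop for one route on one day.
arrivals = ["08:00", "08:10", "08:22", "08:30", "08:45"]


def headways_minutes(times):
    """Return the intervals, in minutes, between consecutive arrivals."""
    parsed = sorted(datetime.strptime(t, "%H:%M") for t in times)
    return [
        (later - earlier).total_seconds() / 60
        for earlier, later in zip(parsed, parsed[1:])
    ]


headways = headways_minutes(arrivals)
average = mean(headways)                 # mean interval between buses
longest_gap = max(headways)              # worst wait a passenger could face
variation = pstdev(headways) / average   # coefficient of variation; lower is more regular

print(f"headways: {headways}")
print(f"mean headway: {average:.2f} min, longest gap: {longest_gap:.0f} min")
print(f"coefficient of variation: {variation:.2f}")
```

Because the indicators are calculated directly from data the system already stores, they can be recalculated routinely at little additional cost.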

Specific stakeholder groups may have particular requirements of a scheme, which influence the methods, budget, timing and scale of the evaluation. It is important to understand these requirements and constraints at an early stage of planning, so that the evaluation can determine the extent to which they are met. The evaluation should be designed so that it is possible to identify how the benefits and costs of the ITS are distributed between the different stakeholders – who gains and who loses.

For example, as well as evaluating the impacts of ITS on the travelling public (the ‘end’ users), evaluation should consider the requirements of the operators and other staff of the organisations involved in delivering the ITS. These impacts can be evaluated by interviewing staff – and in some cases by using data on work patterns and completed tasks.

An evaluation of the impacts of teleworking on travel patterns in Hampshire not only evaluated the impacts on the employees concerned, but also included a survey of managers and colleagues to assess the impact on working patterns and productivity of the organisations for which the employees worked. Source: Transport Research Laboratory, Transportation Research Group (Southampton University) and University of Portsmouth. 1999. Monitoring and evaluation of a teleworking trial in Hampshire. TRL Report 414. Transport Research Laboratory, Crowthorne.

An evaluation of cross-border traffic management strategies in The Netherlands included interviews with employees which identified ways in which the processes for implementing the strategies could be streamlined. Source: Taale, H. 2003. Evaluation of Intelligent Transport Systems in The Netherlands. Transportation Research Board Annual Meeting, 2003. Washington DC.

Evaluation is specialist work. Some road network operators, road authorities and other organisations tasked with implementing ITS have limited in-house capability for monitoring and evaluating ITS. However, evaluation is not always complicated and does not always have to be carried out by specialists. If there is a need to collect data, this can be done in-house, provided that it is done credibly, using an objective approach and sound methods.

For most evaluations it is a good idea to use specialists the first time one is undertaken – so in-house staff can learn how it should be done. Formal training should also be given to enhance the learning experience. It is also worth employing specialists from time to time afterwards so that up-to-date methods can be introduced to in-house staff. In some circumstances it will be important that the evaluation is carried out by an independent organisation – to ensure that the results are credible (for example, in reporting back to funding agencies).

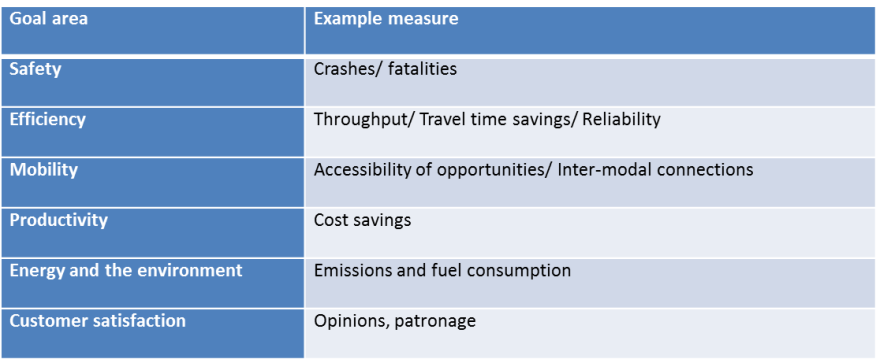

Affordability is often an issue. Whichever evaluation method is used, there may be insufficient budget to answer all questions related to the ITS. It then becomes necessary to decide which aspects of the evaluation have the highest priority. A Critical Factors Analysis can be useful at this stage. It looks at the different goals that a scheme is expected to address – and the key indicators needed to measure the extent to which they have been achieved. This is illustrated in the figure below.

Evaluation management includes planning and monitoring the work of evaluation to ensure its technical quality, cost-effectiveness and timeliness. Key elements in managing a successful evaluation are:

Information is a key element of ITS. ITS applications collect, combine, store and communicate data about the transport network, its users – and the stakeholders involved in providing services. In addition to operational data, monitoring and evaluation also require the collection of data on individuals and organisations, including their views on and responses to the ITS. This can raise concerns about privacy. (See Legal and Regulatory Issues)

Those involved in planning, conducting and reporting on evaluation and monitoring activities need to ensure that data protection and privacy issues are considered and addressed – and that any requirements of data protection legislation are met. The US DOT’s ePrimer discusses data protection for ITS at some length and sets out strategies for mitigating privacy issues – which apply to monitoring and evaluation as well as operational data:

External funding agencies are likely to have specific requirements which must be met by evaluation work, in addition to following best practice in designing and carrying out evaluations. These might relate, for instance, to:

Evaluation results from other schemes are helpful when planning an evaluation. They may:

Care needs to be taken when considering the methods used and results obtained elsewhere. The number of case studies of ITS evaluations in developing economies is limited, and the temptation will be to look at results from developed economies, which are more numerous and readily available but may not be appropriate to the context. User reactions and the valuation of benefits can differ widely between countries and regions with different social and economic profiles. The key issue to be addressed is whether the planned scheme is similar to the case studies in terms of its context, objectives and user base.