The complexities of transport and logistics can be approached by using systems engineering methodologies and user participation in the design work. The Road Network Operator needs to understand fully the total system structure, its dynamic characteristics and the role and responsibilities of its different road users. It is only then that a proper Human-Machine Interaction (HMI) design can be accomplished with positive user acceptance and operational success.

Analysis breaks problems into their parts and attempts to find the optimum solution. This process of breaking apart the whole, however, neglects the interrelationship between the parts – which can often be the root cause of the problem. The “systems” approach argues that in complex systems, the parts do not always provide an understanding of the whole. Rather, in a purposeful system, the whole gives meaning to the parts.

In order to tackle the sometimes contradictory interests of society and individuals, systems engineering methodologies can be applied. An ideal systems approach would start with the analyses of both the users and the problems which these user groups experience in traffic and transport. Procedures should guarantee that the results of these analyses are then used in the design process itself. The introduction of a user-oriented perspective to Intelligent Transport Systems (ITS) has similarities with the introduction of quality assurance procedures found in most industrial activities.

There are other industrial elements of the design process, which have to be included in the required human factors work. They focus on the practical realisation of basic ideas of problem solving which firstly have to be turned into functional concepts. A phase of implementation of these concepts into user-accepted solutions follows. This is often a trade-off between system features and system costs. The features (or benefits) are composed of usability, utility and likeability – whereas the efforts to learn and use, the loss of skills, new elements of risks introduced, and the financial costs constitute the cost elements. The use of human factors knowledge is crucial when high usability is sought.

The difficulty of identifying variables that reliably measure all these elements is evident. The principle of user acceptance is an approach that clearly highlights all the diverging elements that could, would and should influence the design process. Put simply, if the features are valued higher than the costs (using a weighted criteria/cost function), the solution is acceptable and will be purchased, and hopefully used. Marketplace stakeholders, such as end-users, customers and consumers, must be involved.
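The weighted criteria/cost comparison can be sketched in a few lines of Python. This is only an illustration: the 0 to 10 rating scale, the weights and the example ratings are all assumptions made here, and in practice they would come from work with the user groups concerned.

```python
# Illustrative sketch of a weighted criteria/cost comparison.
# The rating scale (0-10), the weights and the ratings are assumptions.

BENEFITS = ("usability", "utility", "likeability")
COSTS = ("learning_effort", "loss_of_skills", "new_risks", "financial_cost")


def weighted_score(ratings, weights, keys):
    """Weighted average of the ratings for the given keys."""
    total_weight = sum(weights[k] for k in keys)
    return sum(ratings[k] * weights[k] for k in keys) / total_weight


def is_acceptable(ratings, weights):
    """Return (acceptable, benefit_score, cost_score)."""
    benefit = weighted_score(ratings, weights, BENEFITS)
    cost = weighted_score(ratings, weights, COSTS)
    return benefit > cost, benefit, cost


# Hypothetical ratings for one candidate ITS solution.
ratings = {
    "usability": 8, "utility": 7, "likeability": 6,
    "learning_effort": 4, "loss_of_skills": 2,
    "new_risks": 3, "financial_cost": 6,
}
# Hypothetical weights expressing how much each element matters.
weights = {
    "usability": 2, "utility": 2, "likeability": 1,
    "learning_effort": 1, "loss_of_skills": 1,
    "new_risks": 2, "financial_cost": 2,
}

acceptable, benefit, cost = is_acceptable(ratings, weights)
print(f"benefit={benefit:.2f} cost={cost:.2f} acceptable={acceptable}")
```

The hard part is not the arithmetic but agreeing the ratings and weights, which is why the stakeholders have to take part.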

New products or solutions in ITS are very seldom developed to solve or meet completely new problems and needs. Instead, better performance of already existing solutions is often the goal. It is also clear that old solutions and products will co-exist side-by-side with new ones. The penetration of new technology in society is often very slow and starts with people who can afford to be “modern” and the most up-to-date. Therefore the design must allow parallel operation of the old and the new, and some form of step-by-step development must be used. Another element to consider is that the long-term goals of systems for traffic and transport are usually societal, while the short-term (market-oriented) goals are individual in that they try to create and meet an instant demand. This inherent conflict must be addressed and resolved at an early stage of the implementation process.

The Road Operator is well-placed to take a strategic and user-centric approach to the design and introduction of ITS. The interactive design process that is required may appear to take a great deal of time and resources, but the benefits will become apparent and should quickly outweigh the upfront costs.

The Road Operator has the opportunity to work with others on ITS innovations that promote and develop integration of transport and other services – to provide additional benefits.

Before introducing ITS or any new technology, or undertaking extensive field trials, it is invariably beneficial to pilot the system or service with a small group of users before more widespread deployment. This approach is part of user-centred design and allows any problems to be addressed, avoiding embarrassing and expensive mistakes.

Encouraging feedback from users and providing suitable mechanisms for monitoring use of ITS allows those responsible for ITS operations to better understand the experience of both occasional and experienced users. This may show how the use of ITS is changing over time (because of changes in other parts of the transport system or the environment) and provides advance information about necessary modifications, including a possible re-design of the ITS.

ITS can, if introduced through a sufficiently wide systems approach, assist users – by providing a measure of integration within and across modes of transport (for example by combining tolling, parking and public transport ticketing). It can also assist with wider common service provision between transport and other urban facilities such as energy services. These different modes of transport and wider urban services typically have different ownership, governance and objectives which present barriers to integration and enhancement of user services.

The rationale behind the development of ITS is the need for high efficiency and quality in new and innovative transport services. The goal of these services is either to meet a certain need for the movement of people and goods, or to supply a specific endeavour (or activity) with the correct amounts of its necessary components at the right time and at the right location. These activities are called transport and logistics respectively. By implication, the design of these services will include modern information and communication technologies (ICT) for the exchange of information in real time, and the result is an “intelligent” transport system.

From their everyday activities, people identify needs for movement between specific locations and become travellers. They engage in trip planning and, if successful, a trip plan will be created by linking a sequence of transport options to serve the journey – if necessary based on different transport means. These transport options can either be chosen from available information (in timetables, for example) or can be made known to someone (who can organise such options) as dynamic demands on transport. These travel demands and travel patterns are today normally captured by surveys and observations on a yearly basis to inform the production of static timetables.

The planning of a journey includes a matching exercise between the total travel demand and the future availability of vehicles to serve that demand and provide the transport service. The matching will be successful only if no disturbances in the traffic process occur and no characteristics of an open-loop control system are evident.

When technical systems such as ITS are put into a societal context, complexity emerges. The systems have to be designed in a cost-effective, efficient, safe and environmentally acceptable way. These objectives require design methods which make it possible to cope with system complexity.

ITS usually involves several human decision makers – and all the decision-making processes which require information about the ITS environment must be considered. Integration of network operations, transport processes and stakeholder perspectives is necessary. Analysis, design and evaluation of this complexity, and its impact on modern solutions for transport services, must be performed adequately.

Activities (or processes) in society which make use of a technical infrastructure and communications networks will be influenced by a large number of decision-makers and stakeholders. They are often geographically dispersed, have contradictory goals and act with different time horizons. In consequence, description and analysis of the interaction between technology and people in a specific class of systems can become so complex that specialist tools and techniques may be required. Expert help should be sought where necessary.

Three highly interrelated perspectives - networks, processes and stakeholders - can be usefully identified and, if combined in an operational way, can be helpful to the analysis, design and evaluation of ITS solutions.

The network perspective is focused on the links, nodes and elements for transport and communications which, when brought together, form the physical network and its structure. The use of technologies (and especially ICT) is important and adds complexity. This can be dealt with by breaking down the network into subnets or subsystems.

The process perspective is focused on the interaction between network components and the different flows of traffic or communications that can be identified in the processes. The dynamic characteristics are related to the transmission and transformation of information and related information channels. A matching between the time horizons of the control processes and the speed of information exchange is crucial for acceptable performance.

The stakeholder perspective is highly related to how ITS supports decision-making. The stakeholders have to interface with the processes and the networks by means of work stations, control panels, mobile or other in-vehicle units. The interface designs must be adapted to the mental models of the processes used by the stakeholders in their tasks. An appropriate filtering of information has to be introduced if the stakeholders are not to be overloaded, or disturbed by other processes or events outside of their control. A hierarchy of abstraction levels can be established. (See Users of ITS and Stakeholders)

Designers often build technical systems without completely understanding the tasks to be performed. Intelligent Transport Systems (ITS) need to be designed to be both useful and usable. Being usable is not enough if the system is not first useful. Users of ITS are diverse individuals – they do not all think the same way and they can be inconsistent and unpredictable. (See Diversity of Users)

It is not surprising that it is often difficult for the designers of technology to understand exactly the real needs of their potential users, how the technology will be used and how use will change as familiarity with the system or service develops. This is particularly the case for complex systems such as ITS in the broader transport context. The goal of good design is for complexity to be made to appear simple or intuitive to its users.

The complexity of ITS processes and their dynamics makes it essential to use sound design principles for robustness. For this reason, ITS require feedback of information from different process states using appropriate sensors. The feedback will also provide the input to adaptive control algorithms for decision-making by users – and make the processes less sensitive to disturbances. The complex nature of transport systems involving the interaction of many different systems and services is clear from the information feedback loops and the varied timescales used in the different decision-making processes.
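A toy simulation can illustrate why feedback makes a process less sensitive to disturbances. The sketch below compares an open-loop process, which follows its plan regardless of what happens, with a closed-loop one that corrects itself using the measured state. The process model, the proportional correction rule and the gain of 0.5 are assumptions chosen for illustration, not a description of any particular ITS control algorithm.

```python
# Toy comparison of open-loop and closed-loop (feedback) control.
# Model, gain and disturbance are illustrative assumptions.

def simulate(steps, disturbance, gain=None, target=0.0):
    """Simulate state[t+1] = state[t] + control[t] + disturbance(t).

    gain=None means open loop: no correction is applied because the plan
    assumed no disturbance. Otherwise a proportional correction based on
    the measured deviation from the target is applied at every step.
    """
    state = target
    history = []
    for t in range(steps):
        control = 0.0 if gain is None else gain * (target - state)
        state = state + control + disturbance(t)
        history.append(state)
    return history


def disturbance(t):
    """A temporary disturbance (for example an incident) during steps 5 to 9."""
    return 1.0 if 5 <= t < 10 else 0.0


open_loop = simulate(20, disturbance)
closed_loop = simulate(20, disturbance, gain=0.5)

print("final deviation, open loop:  ", open_loop[-1])
print("final deviation, closed loop:", round(closed_loop[-1], 4))
```

In this sketch the open-loop process never recovers from the disturbance, while the closed-loop process limits the deviation and then returns close to its target.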

Human error becomes virtually inevitable with the large number of different links and connections in the networks and processes of modern transport systems. In the transport domain one of the most critical situations is that of driver-vehicle interaction – as mistakes, slips and lapses in the primary driving tasks will have safety implications. (See Human Performance)

As well as reducing critical errors, there are many other practical reasons for involving users:

The overall message is that ITS should not be designed, developed or introduced without involving those who must use it. A holistic approach is required which acknowledges and accounts for the interactions between ITS and its users.

User centred design (UCD) is a well-tried methodology that puts users at the heart of the design process, and is highly recommended for developing ITS products and services that must be simple and straightforward to use. It is a multi-stage problem-solving process whereby designers of ITS analyse how users are likely to use a product or service, and also test their assumptions by analysing actual user behaviour in the real world.

A key part of UCD is the identification and analysis of users’ needs. These have to be completely and clearly identified (even if they are conflicting) in order to help identify the trade-offs that often need to happen in the design process.

User-centred design (UCD) aims to optimise a product or service around how users can, want, or need to use it, rather than forcing users to change their behaviour to accommodate the product or service.

The ISO 9241-210 (2010) standard describes six key principles of UCD:

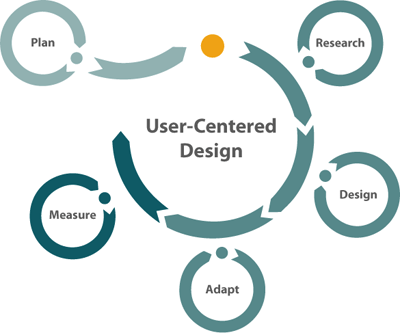

As shown in the figure below, the main steps in UCD, which are iterative, are Plan, Research, Design, Adapt and Measure.

Steps in User Centred Design

A key part of the research stage for UCD is collecting and analysing user needs. Quality models such as ISO/IEC 25010 provide a framework for this activity.

Users include the following:

Some important questions related to user needs are:

User needs can also be defined according to the following list of attributes for an ITS:

The main advice is to adopt a user-centred design (UCD) process that begins with collecting and analysing user needs. The design of the ITS product or service can then be undertaken making use of all the advice and guidance provided below. The product or service should then be trialled and developed with actual users, taking account of their feedback. (See Piloting, feedback and monitoring)

Assistance from human factors professionals should be sought where necessary as there are many techniques available to collect user needs. Some of the main techniques include:

Assistance from human factors professionals should be sought where necessary. The following general guidelines are useful in compiling user needs and undertaking analysis for use in ITS design:

Analysis of tasks and errors is a hugely important activity for anyone seeking to understand how users interact with ITS or wishing to create the environment in which the interaction will take place – between users or between a user and an object/activity. Task analysis requires understanding and documenting all aspects of an activity in order to create or understand processes that are effective, practical and useful. Typically a task analysis is used to identify gaps in the knowledge or understanding of a process. Alternatively it may be used to highlight inefficiencies or safety-critical elements. In both cases the task analysis provides a tool with which to perform a secondary function – usually design-related. Error analysis is a specific extension of a task analysis and is about probing the activities identified by the task analysis – to determine how and why a user might make an error, so that the potential error can be designed out or the consequences can be mitigated.

The person conducting a task analysis first has to identify the overall task or activity to be analysed, and then to define the scope of the analysis. For example, it may be that they wish to examine the tasks performed by an operator at a monitoring station, but are only interested in what the operator does when they are seated at an active station. This would be the defined scope of the analysis.

Within this overall activity all the key subtasks that make up the overall activity must be identified. It is up to the person undertaking the analysis to decide what represents a useful and meaningful division of subtasks. This is something that comes partly from experience of performing such analyses and partly from understanding of the activity being analysed. Crucially, each subtask should have a definable start and end point.

With the subtasks created, the investigator then defines a series of rules and conditions which govern how each subtask is performed. For example, it may be that the monitoring station operator has at their workstation a series of monitoring systems (subtasks) where each subtask is distinct and separate – and it may be that the operator must perform the subtasks in a pre-defined sequence. The investigator must specify the rules governing how each subtask relates to the others. For example: “perform subtasks A and B alternately. At any time, perform subtask C – as and when required”.

The investigator would then look at each subtask in turn and perform a similar process to the process described above. The subtasks that make up subtask A would be identified and the rules governing their commission defined. This process of hierarchical subdivision continues until either there is no more meaningful division of tasks that can be performed – or the investigator has reached a level of understanding useful enough to inform the design.
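The hierarchical subdivision described above can be recorded as a simple tree, in which each task carries the rule (or "plan") governing its subtasks. The sketch below is one possible representation. The class, the function names and the monitoring-station subtasks are hypothetical and serve only to show the structure.

```python
# Minimal sketch of a hierarchical task analysis as a tree.
# Task names and plans are hypothetical examples.
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    name: str
    plan: str = ""  # rule governing how the subtasks are performed
    subtasks: List["Task"] = field(default_factory=list)


def outline(task, prefix="0", depth=0):
    """Return the hierarchy as indented, numbered lines of text."""
    indent = "  " * depth
    lines = [f"{indent}{prefix} {task.name}"]
    if task.plan:
        lines.append(f"{indent}  plan: {task.plan}")
    for i, sub in enumerate(task.subtasks, start=1):
        child_prefix = str(i) if prefix == "0" else f"{prefix}.{i}"
        lines.extend(outline(sub, child_prefix, depth + 1))
    return lines


def leaf_tasks(task):
    """Return the names of the lowest-level tasks, where subdivision stopped."""
    if not task.subtasks:
        return [task.name]
    return [name for sub in task.subtasks for name in leaf_tasks(sub)]


root = Task(
    "Operate monitoring station",
    plan="Perform 1 and 2 alternately. At any time, perform 3 as and when required.",
    subtasks=[
        Task(
            "Check camera feeds",
            plan="Perform 1.1 then 1.2 for each camera.",
            subtasks=[Task("Select camera"), Task("Scan image for incidents")],
        ),
        Task("Check detector alarms"),
        Task("Log an incident"),
    ],
)

print("\n".join(outline(root)))
```

Calling `leaf_tasks(root)` lists the lowest-level tasks, which is a convenient starting point for any later error analysis.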

Performing a task analysis allows a researcher to identify the following:

An error analysis builds on the task analysis and requires a similar approach. It looks at all the different ways in which an operator or outside agent could make an error in each subtask identified. For example, one subtask for the operator of a monitoring station may be to activate an alert system. Errors could include (among others) selecting the wrong incident response plan, pressing the wrong button, failing to push a lever all the way, looking at the wrong screen or dial, or activating the system at the wrong time. Typically such an analysis would be performed by considering each of a list of possible error mechanisms in turn, to avoid missing potential errors. Again, a combination of experience in conducting error analysis and an understanding of the workings of the overall activity is useful.
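Considering each error mechanism in turn for each subtask amounts to working through a grid of combinations. The sketch below generates such a checklist for the analyst to fill in. The subtasks and the four error mechanisms are illustrative assumptions, not a standard taxonomy.

```python
# Sketch: build an error-analysis checklist by pairing every subtask
# with every error mechanism. Both lists are illustrative assumptions.
from itertools import product

subtasks = [
    "Select incident response plan",
    "Press activation button",
    "Confirm alert on screen",
]
error_mechanisms = [
    "action omitted",
    "action on wrong object",
    "action at wrong time",
    "action incomplete",
]


def error_checklist(subtasks, mechanisms):
    """One row per (subtask, mechanism) pair, to be assessed by the analyst."""
    return [
        {"subtask": s, "mechanism": m, "credible": None, "mitigation": ""}
        for s, m in product(subtasks, mechanisms)
    ]


checklist = error_checklist(subtasks, error_mechanisms)
for row in checklist[:4]:
    print(f"{row['subtask']}: {row['mechanism']}")
```

Each row is then judged for credibility and, where the error is credible, a design change or mitigation is noted against it.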

Before conducting a task or error analysis it is important to define what the output of the analysis is to be used for, as this will influence how the analysis is performed. For example, it may be that the investigator is only interested in particular subtasks and activities, or that a particular level of detail is required, below which the analysis is useless and beyond which it is simply a waste of time and effort. Knowing the level of detail required is a key parameter, as without this cut-off point the analysis could go on almost indefinitely. A basic task analysis is a useful way for anyone to gain a clearer picture of any working environment. For more detailed analyses, or situations where the task analysis is to provide the foundation for a larger set of activities, it is advisable to acquire the services of experienced practitioners.

The following is a basic overview of the key principles/stages:

break the overall task down into stages:

A task analysis is often useful in its own right. It may also be useful to conduct a complementary error analysis. Again it is best to use an experienced professional for large-scale analyses – but for a basic assessment, the following method can be applied:

Before introducing ITS or any new technology, or undertaking extensive field trials, it is invariably beneficial to pilot the system or service with a small group of users before more widespread deployment. This approach is part of User Centred Design (See User Centred Design) and allows any problems to be addressed, avoiding embarrassing and expensive mistakes.

Encouraging feedback from users and providing suitable mechanisms for monitoring use of ITS allows those responsible for its operation to better understand the experience of both occasional and frequent users. This may show how use of the ITS is changing over time (because of changes in other parts of the transport system or the environment) and provides advance information about necessary modifications, including a possible re-design of the ITS. (See Evaluation)

Even apparently simple tasks such as administering a questionnaire should be piloted. This is because the design of the questionnaire (and the management processes around the questionnaire) may be found deficient or ambiguous when exposed to actual users. Piloting is really important and should not be missed out.

Pilot studies should be well designed with clear objectives, clear plans for collecting and analysing results, and explicit criteria for determining success or failure. Pilot studies should be analysed in the same way as full scale deployments.

In general, the benefits of a pilot study can be identified under four broad headings:

Monitoring the use of ITS can take many forms. Some examples are:

Feedback is information that comes directly from users of ITS about the satisfaction or dissatisfaction they feel with the product or service. Feedback can lead to early identification of problems and to improvements.

When the ITS users are “internal”, such as traffic control room staff or on-site maintenance workers, encouraging feedback has to be addressed as part of the organisational culture. Ideally a “no blame” culture will exist that allows free expression about what works well and what does not when ITS is incorporated within wider social and organisational settings. Some industries have an anonymous feedback channel to allow comment on systems and operations. Explicit and overt mechanisms to respond to feedback help encourage further contributions from ITS users.

Feedback from road users about ITS has to be carefully interpreted as it may relate to the wider transport system of which ITS is just the visible part. For example, a complaint about the setting on variable speed limit signs may arise because information about incident clearance is not speedily transmitted to a traffic control centre.

Many organisations publish a service level promise or “customer charter” and this may include feedback mechanisms. Road Operators may choose to implement feedback channels that are passive (such as publishing address/phone/email/web address) or adopt more active mechanisms (such as questionnaires and surveys).

Overall evaluation of ITS products and services is an important activity because the performance of the road user will depend crucially on the usability of the ITS. A benefit of improving the ease and efficiency of ITS technology is increased user satisfaction. This can provide business advantages, particularly when users have a choice of ITS products or services. Poor usability of ITS, such as a poor user interface, or inadequate and misleading dynamic signage, may have safety implications in the road environment. Good usability will help to manage and predict road user behaviour and so help increase road network performance. For all these reasons, the performance of ITS in terms of its usability needs to be measured. (See Evaluation)

Usability measurement requires assessment of the effectiveness, efficiency and satisfaction with which representative users carry out representative tasks in representative environments. This requires decisions to be made on the following key points:

Steps in performance measurement:

The process of measuring human performance when the driver is interacting with ITS (particularly when using information and communication devices) can yield safety benefits – although there are challenges with this form of human performance measurement in this context.

Driver distraction (not focussing on the road ahead and the driving task) is an important issue for road safety. ITS products, such as information and communication devices, can greatly assist the driver (for example by indicating suitable routes) but ITS can also be an additional source of distraction. Distraction can make drivers less aware of other road users such as pedestrians and road workers and less observant of speed limits and traffic lights. (See Road Safety)

Measuring driving performance when interacting with ITS requires specialist equipment and expertise. Measurements made in laboratory settings and driving simulators may not be representative of real driving behaviour. This is because in real driving contexts drivers can choose when to interact (or not) with devices – and can modify their driving style to compensate to some extent for other demands on their attention. On-road measurements have to be designed to be unobtrusive and representative. Field Operational Tests (FOTs) can be designed accordingly and used to investigate both mature and innovative systems. FOTs can involve both professional and ordinary drivers according to the focus of investigation. A “naturalistic” driving study aims to unobtrusively record driver behaviour. Analysis of the drive record is used to identify safety-related events such as distraction, although the interpretation of results can be problematic and controversial.

The weight of scientific evidence points to distraction being an important safety issue. Many governments and Road Operators have sought to restrict drivers’ use of ITS while driving. There are different national and local approaches ranging from guidelines and advice – to bans on specific activities or functions (such as texting or hand-held phone use).

Usability goals for an ITS product or service should be expressed in terms of the usability attribute – such as easy to learn, efficient to use, easy to remember, few errors, subjectively pleasing. Deciding on the relative importance of these goals depends on the ITS and the context but it helps to focus future evaluations on the most important aspects.

Not all performance measurements have to be quantitative but some simple examples of performance metrics that might be of interest to Road Operators are:

It is also important to collect qualitative data. This can help explain the reasons behind a particular performance and may uncover the user's mental processes and beliefs about how the ITS operates (which may be correct or incorrect).
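On the quantitative side, the three usability measures named earlier (effectiveness, efficiency and satisfaction) can be summarised with simple descriptive statistics. The sketch below assumes one possible way of quantifying them: task completion rate for effectiveness, time taken on completed tasks for efficiency, and a 1 to 5 rating for satisfaction. The trial data are invented for illustration.

```python
# Sketch: summarise usability trials with simple descriptive statistics.
# The way each measure is quantified, and the data, are assumptions.
import statistics

# Each trial: (task completed?, time taken in seconds, satisfaction rating 1-5)
trials = [
    (True, 42.0, 4),
    (True, 38.0, 5),
    (False, 90.0, 2),
    (True, 50.0, 4),
    (True, 46.0, 3),
]

completed_times = [time for done, time, _ in trials if done]

# Effectiveness: share of trials in which the task was completed.
completion_rate = len(completed_times) / len(trials)

# Efficiency: average time and spread for the completed tasks only.
mean_time = statistics.mean(completed_times)
time_spread = statistics.stdev(completed_times)

# Satisfaction: average rating over all trials.
mean_satisfaction = statistics.mean(rating for _, _, rating in trials)

print(f"completion rate:   {completion_rate:.0%}")
print(f"mean time (s):     {mean_time:.1f} (spread {time_spread:.1f})")
print(f"mean satisfaction: {mean_satisfaction:.1f} out of 5")
```

Figures like these show what happened; the qualitative data are still needed to explain why.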

Addressing more strategic performance goals such as “safety” is a wider question in which the ITS has to be considered in the broader transport context. (See Road Safety)

Performance testing can be complex. Consult human factors professionals where necessary and:

Simple descriptive statistics (such as average values and spread) may be sufficient to characterise the particular performance metric – but: